Note

Go to the end to download the full example code.

1D Inversion of for a Single Sounding#

Here we use the module simpeg.electromangetics.frequency_domain_1d to invert frequency domain data and recover a 1D electrical conductivity model. In this tutorial, we focus on the following:

How to define sources and receivers from a survey file

How to define the survey

Sparse 1D inversion of with iteratively re-weighted least-squares

For this tutorial, we will invert 1D frequency domain data for a single sounding. The end product is layered Earth model which explains the data. The survey consisted of a vertical magnetic dipole source located 30 m above the surface. The receiver measured the vertical component of the secondary field at a 10 m offset from the source in ppm.

Import modules#

import os

import tarfile

import numpy as np

import matplotlib.pyplot as plt

from discretize import TensorMesh

import simpeg.electromagnetics.frequency_domain as fdem

from simpeg.utils import mkvc, plot_1d_layer_model

from simpeg import (

maps,

data,

data_misfit,

inverse_problem,

regularization,

optimization,

directives,

inversion,

utils,

)

plt.rcParams.update({"font.size": 16, "lines.linewidth": 2, "lines.markersize": 8})

# sphinx_gallery_thumbnail_number = 2

Download Test Data File#

Here we provide the file path to the data we plan on inverting. The path to the data file is stored as a tar-file on our google cloud bucket: “https://storage.googleapis.com/simpeg/doc-assets/em1dfm.tar.gz”

# storage bucket where we have the data

data_source = "https://storage.googleapis.com/simpeg/doc-assets/em1dfm.tar.gz"

# download the data

downloaded_data = utils.download(data_source, overwrite=True)

# unzip the tarfile

tar = tarfile.open(downloaded_data, "r")

tar.extractall()

tar.close()

# path to the directory containing our data

dir_path = downloaded_data.split(".")[0] + os.path.sep

# files to work with

data_filename = dir_path + "em1dfm_data.txt"

Downloading https://storage.googleapis.com/simpeg/doc-assets/em1dfm.tar.gz

saved to: /home/vsts/work/1/s/tutorials/07-fdem/em1dfm.tar.gz

Download completed!

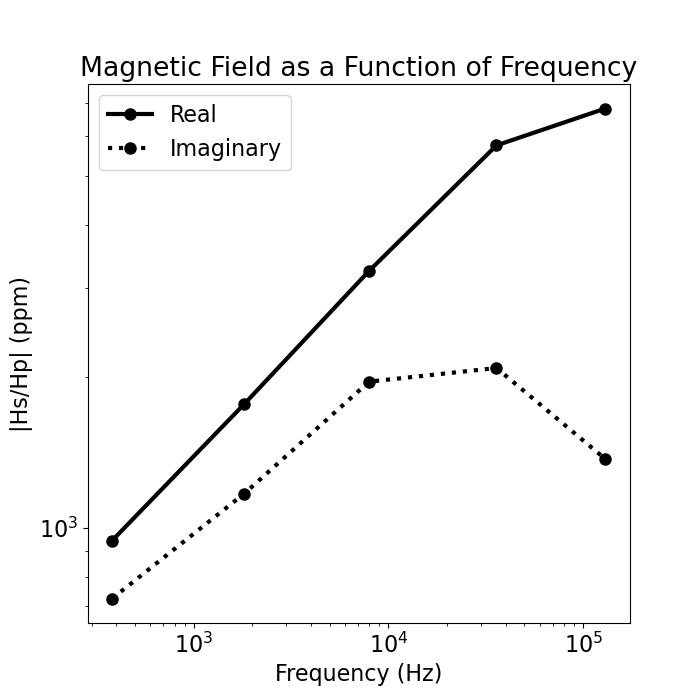

Load Data and Plot#

Here we load and plot the 1D sounding data. In this case, we have the secondary field response in ppm for a set of frequencies.

# Load field data

# dobs = np.loadtxt(str(data_filename))

dobs = np.loadtxt(str(data_filename), skiprows=1)

# Define receiver locations and observed data

frequencies = dobs[:, 0]

dobs = mkvc(dobs[:, 1:].T)

fig, ax = plt.subplots(1, 1, figsize=(7, 7))

ax.loglog(frequencies, np.abs(dobs[0::2]), "k-o", lw=3)

ax.loglog(frequencies, np.abs(dobs[1::2]), "k:o", lw=3)

ax.set_xlabel("Frequency (Hz)")

ax.set_ylabel("|Hs/Hp| (ppm)")

ax.set_title("Magnetic Field as a Function of Frequency")

ax.legend(["Real", "Imaginary"])

<matplotlib.legend.Legend object at 0x7f07eb294710>

Defining the Survey#

Here we demonstrate a general way to define the receivers, sources and survey. The survey consisted of a vertical magnetic dipole source located 30 m above the surface. The receiver measured the vertical component of the secondary field at a 10 m offset from the source in ppm.

source_location = np.array([0.0, 0.0, 30.0])

moment = 1.0

receiver_location = np.array([10.0, 0.0, 30.0])

receiver_orientation = "z"

data_type = "ppm"

# Receiver list

receiver_list = []

receiver_list.append(

fdem.receivers.PointMagneticFieldSecondary(

receiver_location,

orientation=receiver_orientation,

data_type=data_type,

component="real",

)

)

receiver_list.append(

fdem.receivers.PointMagneticFieldSecondary(

receiver_location,

orientation=receiver_orientation,

data_type=data_type,

component="imag",

)

)

# Define source list

source_list = []

for freq in frequencies:

source_list.append(

fdem.sources.MagDipole(

receiver_list=receiver_list,

frequency=freq,

location=source_location,

orientation="z",

moment=moment,

)

)

# Survey

survey = fdem.survey.Survey(source_list)

Assign Uncertainties and Define the Data Object#

Here is where we define the data that are inverted. The data are defined by the survey, the observation values and the uncertainties.

# 5% of the absolute value

uncertainties = 0.05 * np.abs(dobs) * np.ones(np.shape(dobs))

# Define the data object

data_object = data.Data(survey, dobs=dobs, noise_floor=uncertainties)

Defining a 1D Layered Earth (1D Tensor Mesh)#

Here, we define the layer thicknesses for our 1D simulation. To do this, we use the TensorMesh class.

# Layer thicknesses

inv_thicknesses = np.logspace(0, 1.5, 25)

# Define a mesh for plotting and regularization.

mesh = TensorMesh([(np.r_[inv_thicknesses, inv_thicknesses[-1]])], "0")

Define a Starting and/or Reference Model and the Mapping#

Here, we create starting and/or reference models for the inversion as well as the mapping from the model space to the active cells. Starting and reference models can be a constant background value or contain a-priori structures. Here, the starting model is log(0.1) S/m.

Define log-conductivity values for each layer since our model is the log-conductivity. Don’t make the values 0! Otherwise the gradient for the 1st iteration is zero and the inversion will not converge.

# Define model. A resistivity (Ohm meters) or conductivity (S/m) for each layer.

starting_model = np.log(0.1 * np.ones(mesh.nC))

# Define mapping from model to active cells.

model_mapping = maps.ExpMap()

Define the Physics using a Simulation Object#

simulation = fdem.Simulation1DLayered(

survey=survey, thicknesses=inv_thicknesses, sigmaMap=model_mapping

)

Define Inverse Problem#

The inverse problem is defined by 3 things:

Data Misfit: a measure of how well our recovered model explains the field data

Regularization: constraints placed on the recovered model and a priori information

Optimization: the numerical approach used to solve the inverse problem

# Define the data misfit. Here the data misfit is the L2 norm of the weighted

# residual between the observed data and the data predicted for a given model.

# The weighting is defined by the reciprocal of the uncertainties.

dmis = data_misfit.L2DataMisfit(simulation=simulation, data=data_object)

# Define the regularization (model objective function)

reg_map = maps.IdentityMap(nP=mesh.nC)

reg = regularization.Sparse(mesh, mapping=reg_map, alpha_s=0.025, alpha_x=1.0)

# reference model

reg.reference_model = starting_model

# Define sparse and blocky norms p, q

reg.norms = [0, 0]

# Define how the optimization problem is solved. Here we will use an inexact

# Gauss-Newton approach that employs the conjugate gradient solver.

opt = optimization.ProjectedGNCG(maxIter=50, maxIterLS=20, cg_maxiter=30, cg_rtol=1e-3)

# Define the inverse problem

inv_prob = inverse_problem.BaseInvProblem(dmis, reg, opt)

Define Inversion Directives#

Here we define any directiveas that are carried out during the inversion. This includes the cooling schedule for the trade-off parameter (beta), stopping criteria for the inversion and saving inversion results at each iteration.

# Defining a starting value for the trade-off parameter (beta) between the data

# misfit and the regularization.

starting_beta = directives.BetaEstimate_ByEig(beta0_ratio=1e1)

# Update the preconditionner

update_Jacobi = directives.UpdatePreconditioner()

# Options for outputting recovered models and predicted data for each beta.

save_iteration = directives.SaveOutputEveryIteration(save_txt=False)

# Directive for the IRLS

update_IRLS = directives.UpdateIRLS(max_irls_iterations=30, irls_cooling_factor=1.5)

# Updating the preconditionner if it is model dependent.

update_jacobi = directives.UpdatePreconditioner()

# Add sensitivity weights

sensitivity_weights = directives.UpdateSensitivityWeights()

# The directives are defined as a list.

directives_list = [

sensitivity_weights,

starting_beta,

save_iteration,

update_IRLS,

update_jacobi,

]

/home/vsts/work/1/s/simpeg/directives/_directives.py:1865: FutureWarning:

SaveEveryIteration.save_txt has been deprecated, please use SaveEveryIteration.on_disk. It will be removed in version 0.26.0 of SimPEG.

/home/vsts/work/1/s/simpeg/directives/_directives.py:1866: FutureWarning:

SaveEveryIteration.save_txt has been deprecated, please use SaveEveryIteration.on_disk. It will be removed in version 0.26.0 of SimPEG.

Running the Inversion#

To define the inversion object, we need to define the inversion problem and the set of directives. We can then run the inversion.

# Here we combine the inverse problem and the set of directives

inv = inversion.BaseInversion(inv_prob, directives_list)

# Run the inversion

recovered_model = inv.run(starting_model)

Running inversion with SimPEG v0.25.1.dev9+g471344c9a

================================================= Projected GNCG =================================================

# beta phi_d phi_m f |proj(x-g)-x| LS iter_CG CG |Ax-b|/|b| CG |Ax-b| Comment

-----------------------------------------------------------------------------------------------------------------

0 2.45e+02 2.81e+02 0.00e+00 2.81e+02 0 inf inf

1 2.45e+02 4.29e+01 2.20e-01 9.67e+01 7.91e+01 0 8 4.28e-04 3.38e-02

2 1.22e+02 7.72e+00 2.09e-01 3.33e+01 3.68e+01 0 8 7.21e-04 2.66e-02

Reached starting chifact with l2-norm regularization: Start IRLS steps...

irls_threshold 2.7315741327602128

3 1.22e+02 7.39e+00 2.02e-01 3.21e+01 7.92e+00 0 9 3.54e-04 2.81e-03

4 2.05e+02 1.26e+01 1.57e-01 4.48e+01 1.83e+01 0 8 2.40e-04 4.39e-03

5 1.58e+02 1.38e+01 1.61e-01 3.93e+01 6.54e+00 0 7 6.22e-04 4.07e-03

6 1.17e+02 1.33e+01 1.80e-01 3.43e+01 3.53e+00 0 7 4.66e-04 1.65e-03

7 8.75e+01 1.21e+01 1.91e-01 2.88e+01 3.51e+00 0 8 5.43e-04 1.91e-03

8 6.88e+01 1.07e+01 1.83e-01 2.33e+01 3.67e+00 0 8 2.34e-04 8.59e-04

9 6.88e+01 9.52e+00 1.52e-01 2.00e+01 5.72e+00 0 8 9.02e-04 5.16e-03

10 6.88e+01 8.61e+00 1.28e-01 1.74e+01 6.57e+00 0 9 5.36e-04 3.52e-03

11 1.09e+02 9.08e+00 8.68e-02 1.85e+01 1.89e+01 0 10 3.04e-04 5.73e-03

12 1.09e+02 8.81e+00 6.56e-02 1.60e+01 1.29e+01 0 10 5.47e-04 7.07e-03

13 1.71e+02 9.02e+00 4.23e-02 1.62e+01 3.24e+01 0 9 7.13e-04 2.31e-02

14 1.71e+02 8.46e+00 3.18e-02 1.39e+01 1.99e+01 0 10 3.01e-04 5.97e-03

15 2.71e+02 8.42e+00 2.20e-02 1.44e+01 3.76e+01 0 9 5.92e-04 2.23e-02

16 4.32e+02 8.47e+00 1.49e-02 1.49e+01 3.84e+01 0 9 5.67e-04 2.18e-02

17 6.88e+02 8.55e+00 9.94e-03 1.54e+01 4.19e+01 0 9 2.56e-04 1.07e-02

18 1.09e+03 8.64e+00 6.57e-03 1.58e+01 7.71e+01 0 9 3.07e-04 2.36e-02

19 1.72e+03 8.73e+00 4.33e-03 1.62e+01 3.47e+01 0 9 6.21e-04 2.15e-02

20 2.71e+03 8.82e+00 2.85e-03 1.65e+01 3.24e+01 0 9 5.43e-04 1.76e-02

21 4.24e+03 8.92e+00 1.87e-03 1.69e+01 3.22e+01 0 9 4.79e-04 1.54e-02

22 6.62e+03 9.01e+00 1.23e-03 1.72e+01 3.21e+01 0 9 5.08e-04 1.63e-02

23 6.62e+03 8.58e+00 8.73e-04 1.44e+01 1.25e+01 0 10 1.50e-04 1.87e-03

24 1.05e+04 8.49e+00 5.89e-04 1.47e+01 3.47e+01 0 9 9.38e-04 3.26e-02

25 1.66e+04 8.51e+00 3.92e-04 1.50e+01 3.33e+01 0 9 8.20e-04 2.73e-02

26 2.64e+04 8.56e+00 2.60e-04 1.54e+01 3.29e+01 0 9 7.89e-04 2.60e-02

27 4.18e+04 8.64e+00 1.71e-04 1.58e+01 3.27e+01 0 9 7.48e-04 2.44e-02

28 6.61e+04 8.73e+00 1.13e-04 1.62e+01 3.25e+01 0 9 6.73e-04 2.19e-02

29 1.04e+05 8.82e+00 7.41e-05 1.65e+01 3.23e+01 0 9 5.61e-04 1.81e-02

30 1.63e+05 8.92e+00 4.88e-05 1.69e+01 3.22e+01 0 9 4.85e-04 1.56e-02

31 2.54e+05 9.01e+00 3.21e-05 1.72e+01 3.21e+01 0 9 5.09e-04 1.63e-02

32 2.54e+05 8.58e+00 2.27e-05 1.44e+01 1.24e+01 0 10 1.50e-04 1.87e-03

Reach maximum number of IRLS cycles: 30

------------------------- STOP! -------------------------

1 : |fc-fOld| = 8.1161e-02 <= tolF*(1+|f0|) = 2.8196e+01

1 : |xc-x_last| = 1.2925e-01 <= tolX*(1+|x0|) = 1.2741e+00

0 : |proj(x-g)-x| = 1.2447e+01 <= tolG = 1.0000e-01

0 : |proj(x-g)-x| = 1.2447e+01 <= 1e3*eps = 1.0000e-02

0 : maxIter = 50 <= iter = 32

------------------------- DONE! -------------------------

Plotting Results#

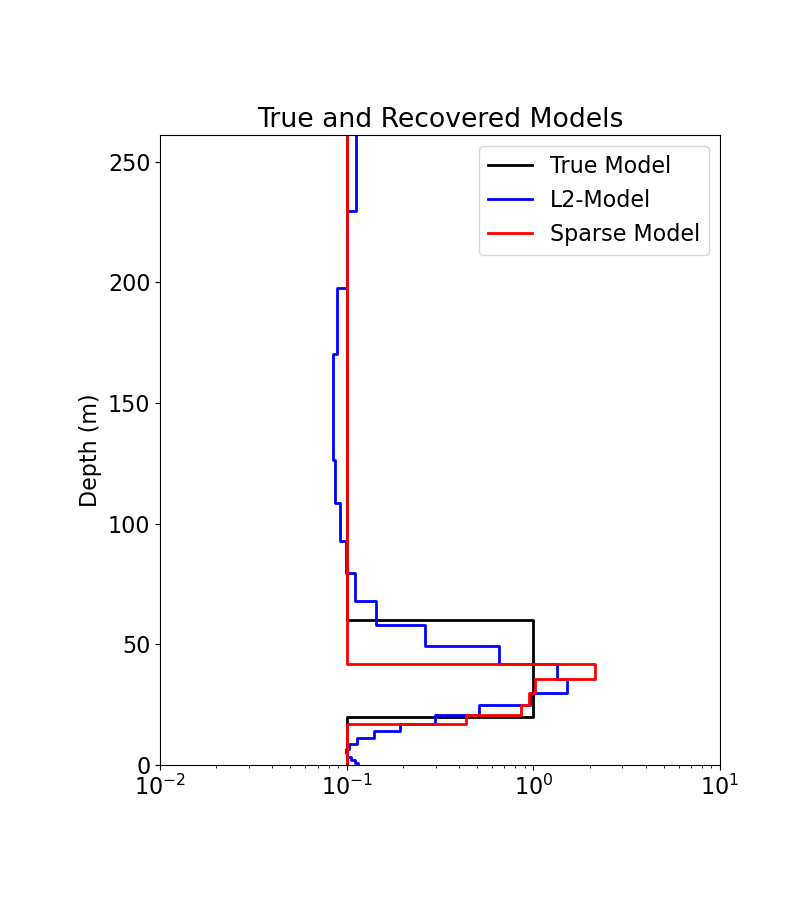

# Load the true model and layer thicknesses

true_model = np.array([0.1, 1.0, 0.1])

true_layers = np.r_[20.0, 40.0, 160.0]

# Extract Least-Squares model

l2_model = inv_prob.l2model

# Plot true model and recovered model

fig = plt.figure(figsize=(8, 9))

x_min = np.min(

np.r_[model_mapping * recovered_model, model_mapping * l2_model, true_model]

)

x_max = np.max(

np.r_[model_mapping * recovered_model, model_mapping * l2_model, true_model]

)

ax1 = fig.add_axes([0.2, 0.15, 0.7, 0.7])

plot_1d_layer_model(true_layers, true_model, ax=ax1, show_layers=False, color="k")

plot_1d_layer_model(

mesh.h[0], model_mapping * l2_model, ax=ax1, show_layers=False, color="b"

)

plot_1d_layer_model(

mesh.h[0], model_mapping * recovered_model, ax=ax1, show_layers=False, color="r"

)

ax1.set_xlim(0.01, 10)

ax1.set_title("True and Recovered Models")

ax1.legend(["True Model", "L2-Model", "Sparse Model"])

plt.gca().invert_yaxis()

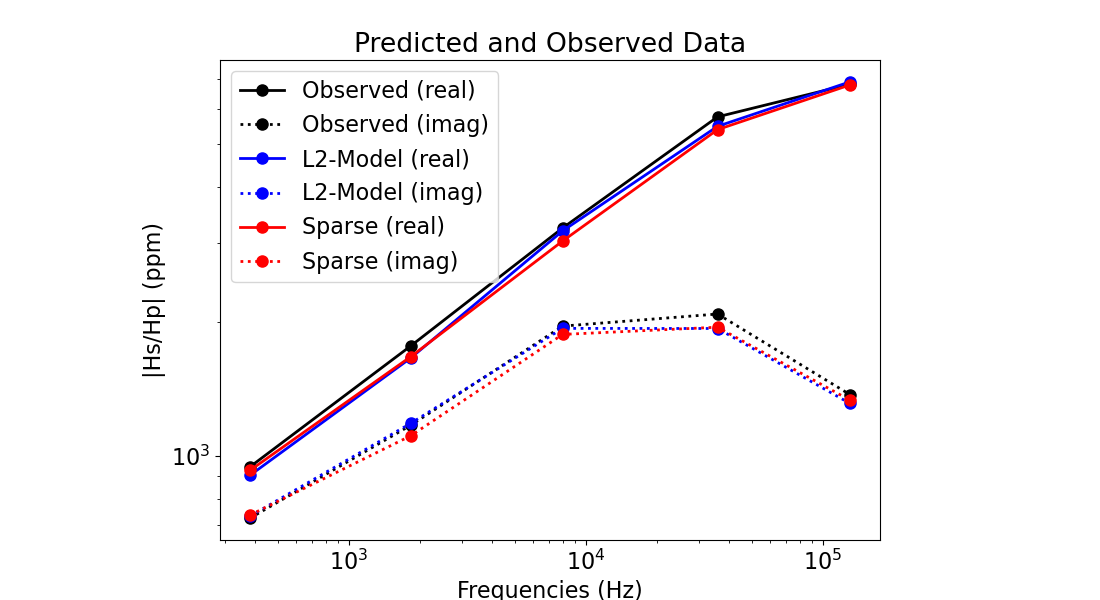

# Plot predicted and observed data

dpred_l2 = simulation.dpred(l2_model)

dpred_final = simulation.dpred(recovered_model)

fig = plt.figure(figsize=(11, 6))

ax1 = fig.add_axes([0.2, 0.1, 0.6, 0.8])

ax1.loglog(frequencies, np.abs(dobs[0::2]), "k-o")

ax1.loglog(frequencies, np.abs(dobs[1::2]), "k:o")

ax1.loglog(frequencies, np.abs(dpred_l2[0::2]), "b-o")

ax1.loglog(frequencies, np.abs(dpred_l2[1::2]), "b:o")

ax1.loglog(frequencies, np.abs(dpred_final[0::2]), "r-o")

ax1.loglog(frequencies, np.abs(dpred_final[1::2]), "r:o")

ax1.set_xlabel("Frequencies (Hz)")

ax1.set_ylabel("|Hs/Hp| (ppm)")

ax1.set_title("Predicted and Observed Data")

ax1.legend(

[

"Observed (real)",

"Observed (imag)",

"L2-Model (real)",

"L2-Model (imag)",

"Sparse (real)",

"Sparse (imag)",

],

loc="upper left",

)

plt.show()

Total running time of the script: (0 minutes 19.191 seconds)

Estimated memory usage: 321 MB